Optimise web scraping

Resource optimisation with JSON-based web scraping rules for static and dynamic websites

The successful use of generative AI depends heavily on the underlying data, which should be clean, structured and complete. Irrelevant and duplicate content leads to poorer generated responses.

Web scraping options for reading web resources can be set in the moinAI Hub. They ensure that relevant content is read and irrelevant content is excluded, which keeps the data clean and focused.

The Customer Success Team will be happy to advise you on optimisation and assist you with implementation. The following describes how web scraping options can be stored and which options are available.

Store web scraping options

The web scraping options can be edited at resource level and bot level. Both ways are described below.

Set centrally for all resources

Web scraping options that apply to all existing and future resources are set centrally under Bot Settings -> General settings & Privacy, in the General Web Scraping Options area.

Custom header settings are also possible. The Customer Success Team will be happy to advise you on the implementation.
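As an illustration, a central configuration could use the excludeTags option covered in this article to drop script and style elements from every resource. This is only a sketch; which tags are worth excluding depends on the websites in question.

```json
{
  "excludeTags": ["script", "style"]
}
```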

Set for individual resources

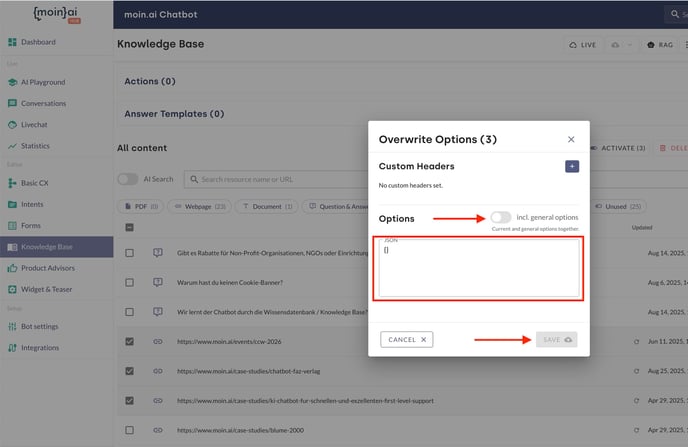

Web scraping options for individual resources are saved via the Knowledge Base menu item. To do this, open the three-dot menu at the bottom right of the resource and click Options.

A window then opens in which you can enter the web scraping options.

Incl. general options button

- Disabled: Only the JSON code stored for the resource affects it.

- Enabled: The web scraping options in Bot Settings > General & Privacy also affect the resource.

Insert the JSON code into the field and click Save to apply the changes. All affected resources are then reloaded, which takes a few minutes.

Bulk adjustment

If you want to adjust web scraping for several resources at once, bulk adjustment is the way to go.

1. Select all resources:

- In the Knowledge Base menu, set ‘Rows per page: all’ at the bottom right.

- Then select all resources by ticking the box at the top left at the beginning of the resource list.

2. Select multiple resources:

- Select individual resources by clicking the checkbox on the left.

- Once you have selected the desired resources, click the Options button in the top right corner to open a window where you can define the web scraping options.

Incl. general options button

- Disabled: Only the JSON code stored for the selected resources affects them.

- Enabled: The web scraping options in Bot Settings > General & Privacy also affect the selected resources.

Insert the JSON code into the field and click Save to apply the changes. All affected resources are then reloaded, which takes a few minutes.

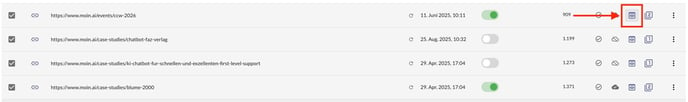

Click the eye button to the right of a resource to check whether the relevant content has been imported correctly.

Available web scraping options

The following describes some web scraping parameters for expert mode when adding resources/sources to the moinAI Knowledge Base. How to add a resource is described in a separate article.

These settings help to control the specific parts of the website content that should be included or excluded from the extraction process.

- includeTags: This option allows you to specify which HTML tags should be included in the output. For example, if you only want to extract content within the <p> and <h1> tags, the specification is: ["p", "h1"].

- excludeTags: This option allows you to specify which HTML tags should be excluded from the output. For example, if you want to remove all <script> and <style> tags from the extracted content, the specification is: ["script", "style"].

- onlyMainContent: This parameter ensures that only the main content of the web page is returned, without headers, navigation bars, footers and other non-essential elements. It is useful for extracting the core information of a web page without additional ballast. Sometimes the parameter can be too restrictive. If important content is missing from the page, the parameter can be set to false. The default setting is true.

Example: Option to retrieve page content without the ‘header’

    {
      "onlyMainContent": false,
      "excludeTags": ["header"]
    }
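The same structure applies to includeTags. As a sketch, the following would restrict extraction to paragraphs and first-level headings, using the values from the parameter description above. Whether this is sufficient depends on the markup of the page in question.

```json
{
  "includeTags": ["p", "h1"]
}
```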

Scrape complex dynamic websites

Scenario: ABC Company has an FAQ page (https://abc-unternehmen.com/de/faq). The answers are only loaded after clicking on the respective question. In addition, the categories change dynamically after each click.

Normal scraping of the website is not possible in this case. With moinAI, AI actions can be executed to reproduce this behaviour: a click on the category is simulated, and all questions are then opened by clicking each of them.

Because the category only changes after the click, and the questions and answers are then reloaded and overlaid, a separate resource must be created in the knowledge base for each category.

Example JSON: For the general FAQ website

    {
      "actions": [
        {
          "type": "executeJavascript",
          "script": "document.querySelectorAll(\"div.cursor-pointer.justify-between\").forEach(element => { element.click(); });"
        },
        {
          "type": "wait",
          "milliseconds": 5000
        }
      ]
    }
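If the questions themselves are rendered with a delay, a wait could also be placed before the click. The following is a sketch only: it assumes that actions run in the order listed, which this article does not state explicitly, and the 2000 ms value is an arbitrary placeholder.

```json
{
  "actions": [
    {
      "type": "wait",
      "milliseconds": 2000
    },
    {
      "type": "executeJavascript",
      "script": "document.querySelectorAll(\"div.cursor-pointer.justify-between\").forEach(element => { element.click(); });"
    },
    {
      "type": "wait",
      "milliseconds": 5000
    }
  ]
}
```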

For each additional category:

    {
      "includeTags": [".gap-3"],
      "actions": [
        {
          "type": "executeJavascript",
          "script": "document.querySelectorAll(\"div.webkit-tap-transparent.cursor-pointer\")[1].click();"
        },
        {
          "type": "executeJavascript",
          "script": "document.querySelectorAll(\"div.cursor-pointer.justify-between\").forEach(element => { element.click(); });"
        },
        {
          "type": "wait",
          "milliseconds": 5000
        }
      ]
    }
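In this example, the index [1] selects the second element matched by the category selector, since querySelectorAll returns elements in page order and counts from zero. Assuming the further categories match the same selector, the resource for a third category would presumably differ only in the index, as sketched here. The selectors are taken from the example above and need to be checked against the actual page.

```json
{
  "includeTags": [".gap-3"],
  "actions": [
    {
      "type": "executeJavascript",
      "script": "document.querySelectorAll(\"div.webkit-tap-transparent.cursor-pointer\")[2].click();"
    },
    {
      "type": "executeJavascript",
      "script": "document.querySelectorAll(\"div.cursor-pointer.justify-between\").forEach(element => { element.click(); });"
    },
    {
      "type": "wait",
      "milliseconds": 5000
    }
  ]
}
```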